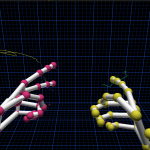

The Leap Motion, whose maker is a partner of ASUS, works on the same principle as the Asus Xtion Pro: it emits infrared light and uses the reflection to track parts of the human body. Available since July 2013 for about 90 EUR, the Leap Motion is an inexpensive but limited input device that is optimized for tracking fingers and hands, as shown in the following illustrations.

Its main features are simultaneous tracking of two hands and gesture recognition for all ten fingers. The device works reliably in daylight at distances between 10 cm and 1 m.

During my thesis, I tested the existing ROS driver, which currently supports only one hand and was not able to provide 3D PointCloud data. In brief, the Leap Motion is unfortunately unsuitable for our project, as its only use would be unreliable robot control by hand gestures.