The Arduino (in some cases also called Genuino) is a hardware and software development platform. Over recent years its development environment has grown into a useful, library-rich platform. Because of that, the esp8266 community created an Arduino core, so the chip can be programmed from the Arduino IDE instead of in Lua.

Setting up the Arduino IDE for esp8266 programming is really easy. Just follow this small guide.

Step 1: Download the Arduino IDE software

Go to the download page for the most recent version of the Arduino IDE. Please keep in mind that you’ll need at least version 1.6.x. In some cases that version is not available in the package repositories of your beloved Debian distribution.

Click on the Windows, Linux or Mac version of your choice. We are going to use the Linux 64-bit version for the screenshots that follow.

Extract the provided tar.gz. On Windows or Mac, please use an extraction tool such as 7zip or WinRAR.
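
On Linux the extraction can also be done from the terminal. The following is a minimal sketch, assuming the download is a gzip-compressed tar archive as described above. The helper name `extract_archive`, the archive file name and the target directory are placeholders for illustration, not names taken from the Arduino download page.

```shell
#!/bin/sh
# Hypothetical helper: unpack a .tar.gz archive into a target directory.
extract_archive() {
    archive="$1"   # path to the downloaded .tar.gz
    dest="$2"      # directory to unpack into (created if missing)
    mkdir -p "$dest" && tar -xzf "$archive" -C "$dest"
}

# Example call; the file name is a placeholder for whatever you downloaded.
ARCHIVE="arduino-linux64.tar.gz"
if [ -f "$ARCHIVE" ]; then
    extract_archive "$ARCHIVE" "arduino-ide"
fi
```

Unpacking into a dedicated directory keeps the IDE files in one place, which makes the following steps easier to run from a single location.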

Please make sure the arduino.sh file in the extracted directory is executable.

You can also use the terminal command (run it from the extracted directory):

chmod +x ./arduino.sh
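
If you want to confirm that the executable bit is really set, the check can be scripted. This is a small sketch, assuming you run it from the directory that contains arduino.sh; the helper name `make_executable` is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: set the executable bit only if it is missing,
# and report what happened.
make_executable() {
    file="$1"
    if [ ! -f "$file" ]; then
        echo "not found: $file"
        return 1
    fi
    if [ -x "$file" ]; then
        echo "already executable: $file"
    else
        chmod +x "$file" && echo "made executable: $file"
    fi
}

# arduino.sh is the file name used in this guide.
make_executable ./arduino.sh || echo "change into the extracted directory first"
```

Running it twice is harmless: the second call only reports that the file is already executable.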

After that double click the application…

or use the terminal to start the IDE. This is also very useful for seeing the errors that occasionally occur while flashing the esp8266.
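
To keep those terminal messages around for later, the output can be copied into a log file while it is still shown live. A minimal sketch, assuming the IDE is started through arduino.sh from the extracted directory; the helper `run_logged` and the log file name are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: run a command, show its output live, and keep a copy.
run_logged() {
    logfile="$1"
    shift
    # 2>&1 merges error messages into normal output so tee captures both.
    "$@" 2>&1 | tee "$logfile"
}

# Start the IDE only if the launcher is present and executable.
if [ -x ./arduino.sh ]; then
    run_logged arduino-ide.log ./arduino.sh
fi
```

After a failed flash, the log file can be searched or pasted into a forum post without having to reproduce the error.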

Et voilà, your IDE should look something like this:

Step 2: Set up the IDE to work with the ESP8266 via the Boards Manager

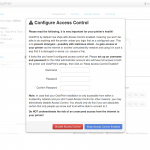

We need to add an additional URL to the Boards Manager sources list. To do that, open File > Preferences

and add http://arduino.esp8266.com/stable/package_esp8266com_index.json to the Additional Boards Manager URLs field.

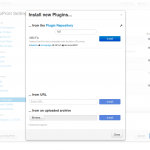
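
If you like to double-check the setting outside the GUI, the stored value can be read from the IDE's preferences file. This sketch rests on two assumptions that are not part of this guide: that the IDE keeps its settings in `~/.arduino15/preferences.txt` on Linux, and that the key is called `boardsmanager.additional.urls`. The helper name `show_board_urls` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the Boards Manager URL setting from a preferences file.
show_board_urls() {
    prefs="$1"
    # The key name is an assumption about the IDE's preferences format.
    grep '^boardsmanager\.additional\.urls=' "$prefs"
}

# Assumed default location on Linux; adjust if your IDE stores it elsewhere.
prefs_file="${HOME:-.}/.arduino15/preferences.txt"
if [ -f "$prefs_file" ]; then
    show_board_urls "$prefs_file" || echo "no additional Boards Manager URL set"
fi
```

If the line printed contains the esp8266 URL from above, the Boards Manager should be able to find the package.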

After that open Tools > Boards > Boards Manager and search for esp8266.

Install the latest version:

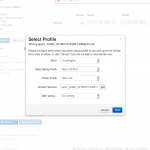

After that you should be able to select the Generic ESP8266 Module board in Tools > Board.

Congratulations! You are now able to compile esp8266 code from the Arduino IDE.

Step 3: Use the Examples to learn to code

This step is easy. Open File > Examples > ESP8266WiFi > WiFiWebServer as a good starting point.

In the next post we will look at how to wire up the ESP8266 for easy flashing.