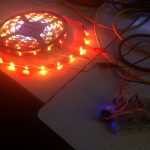

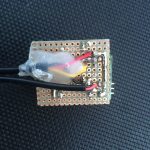

It was harder than it looks, but getting the ESP8266 to drive a 5 m LED strip via the Open Pixel Control protocol took me a night (which might explain the extra-bad English, as I'm writing this post right after). But it's real fun!

There are several ways to make the controller blink. This is the easiest:

```shell
while true; do ( echo -en '\x00\x00\x02\xA6'; dd if=/dev/urandom bs=678 count=1 status=none ) | ncat --send-only --udp 172.22.99.155 2342; sleep 0.1; done
```

In an endless loop, it sends the static 4-byte header (channel, command and a two-byte data length), followed by 8 bits of red, 8 bits of green and 8 bits of blue for each LED on the strip. The color values are random bytes read from /dev/urandom, Linux's source of randomness. The result lacks a bit of white because my power supply hit its limit. If you rebuild this, use 5 V and plan for about 1 A per 30 LEDs (roughly 7.5 A for the 226 LEDs used here).
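For comparison, here is a rough Python equivalent of the shell loop above. It is a sketch, not the author's code: the function names are made up, and it assumes, like the shell command, that the controller accepts one OPC frame per UDP datagram on port 2342.

```python
import os
import socket
import struct
import time

NUM_LEDS = 226        # LED count used in this post
CHANNEL = 0           # first header byte: OPC channel
CMD_SET_PIXELS = 0    # second header byte: OPC command 0, "set pixel colors"


def opc_frame(rgb_bytes: bytes, channel: int = CHANNEL, command: int = CMD_SET_PIXELS) -> bytes:
    """Prefix the color data with the 4-byte OPC header.

    Header layout: channel (1 byte), command (1 byte),
    data length (2 bytes, big-endian / network byte order).
    """
    if len(rgb_bytes) > 0xFFFF:
        raise ValueError("the length field only holds up to 65535 bytes")
    return struct.pack(">BBH", channel, command, len(rgb_bytes)) + rgb_bytes


def random_frame(num_leds: int = NUM_LEDS) -> bytes:
    """Same idea as `dd if=/dev/urandom bs=678 count=1`: 3 random bytes per LED."""
    return opc_frame(os.urandom(3 * num_leds))


def blink_forever(host: str, port: int = 2342, delay: float = 0.1) -> None:
    """Send a fresh random frame every `delay` seconds, like the shell loop."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        while True:
            sock.sendto(random_frame(), (host, port))
            time.sleep(delay)
```

Calling `blink_forever("172.22.99.155")` should then behave like the shell loop until it is interrupted with Ctrl+C.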

Another thing worth mentioning is the data length field: bytes 3 and 4 of the header, or \x02\xA6 in the command above. This length must equal the number of LEDs times three. In this example 226 LEDs were controlled, because the two bytes read in network byte order (big-endian) give 0x02A6 = 678 = 3 × 226.
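On the receiving side, the length check boils down to a few lines. The following is a hypothetical Python sketch of what a receiver has to do with each datagram; the actual firmware on the ESP8266 is a separate program, and `parse_opc` is an invented name.

```python
import struct
from typing import List, Tuple

# channel, command, data length (2 bytes, network byte order)
HEADER = struct.Struct(">BBH")


def parse_opc(packet: bytes, num_leds: int) -> List[Tuple[int, ...]]:
    """Validate the OPC header and split the payload into (r, g, b) triples."""
    if len(packet) < HEADER.size:
        raise ValueError("packet is shorter than the 4-byte header")
    _channel, _command, length = HEADER.unpack_from(packet)
    payload = packet[HEADER.size:HEADER.size + length]
    if len(payload) != length:
        raise ValueError(f"header announces {length} bytes, got {len(payload)}")
    if length != 3 * num_leds:
        raise ValueError(f"expected {3 * num_leds} bytes for {num_leds} LEDs, got {length}")
    # Indexing a bytes object yields ints, so each tuple is (red, green, blue).
    return [tuple(payload[i:i + 3]) for i in range(0, length, 3)]
```

The arithmetic from the paragraph above can be checked directly: `struct.pack(">H", 3 * 226)` yields `b'\x02\xa6'`, the two length bytes used in the shell command.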

The result is this little animation:

Another option is to send these packets from a small Python script like this one:

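```python
import socket
import struct
import time

HOST = 'your-hostname'  # hostname or IP address of the ESP8266
PORT = 2342             # UDP port the controller listens on
NUM_LEDS = 226
REPEATS = 1024          # each frame is sent 1024 times; UDP does not guarantee delivery

colors = [(255, 255, 255), (255, 0, 0), (0, 0, 255), (0, 255, 0)]

with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as s:
    for color in colors:
        print("sending color {} {} {}".format(*color))
        # Header: channel 0, command 0, data length = 3 bytes per LED (network byte order)
        data = [struct.pack('!BBH', 0, 0, 3 * NUM_LEDS)]
        # The same RGB triple for every LED on the strip
        data.extend(struct.pack('BBB', *color) for _ in range(NUM_LEDS))
        packet = b"".join(data)
        for _ in range(REPEATS):
            s.sendto(packet, (HOST, PORT))
        time.sleep(0.5)
```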

The code for the controller is on GitHub.

For the web interface I wrote a small external JavaScript file, which is loaded by most pages the ESP8266 serves. It fetches the temperature as JSON and visualizes it in real time with d3.js. The scales adapt to the measured temperature and time range automagically. The site can also be displayed on multiple clients at once without losing data or performance.
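The auto-fitting scales are the interesting part. With d3.js this is typically done by setting a linear scale's domain to the extent of the current data (`d3.extent`). The Python sketch below mirrors that idea outside the browser; all names, the 300-sample window and the 600×200 plot size are assumptions for illustration, not taken from the actual JavaScript file.

```python
from collections import deque
from typing import Callable, Deque, Iterable, List, Tuple

Sample = Tuple[float, float]  # (timestamp in seconds, temperature in °C)


def extent(values: Iterable[float]) -> Tuple[float, float]:
    """Smallest and largest value, like d3.extent."""
    vals = list(values)
    return min(vals), max(vals)


def linear_scale(domain: Tuple[float, float], range_: Tuple[float, float]) -> Callable[[float], float]:
    """Map the domain linearly onto range_, like a d3 linear scale."""
    d0, d1 = domain
    r0, r1 = range_
    span = (d1 - d0) or 1.0  # avoid division by zero when all samples are equal
    return lambda v: r0 + (v - d0) / span * (r1 - r0)


class TemperaturePlot:
    """Keeps the last `max_samples` readings and refits both axes on every redraw."""

    def __init__(self, width: int = 600, height: int = 200, max_samples: int = 300):
        self.width, self.height = width, height
        self.samples: Deque[Sample] = deque(maxlen=max_samples)

    def add(self, t: float, temp: float) -> None:
        self.samples.append((t, temp))

    def points(self) -> List[Tuple[float, float]]:
        """Pixel coordinates for the current samples; y grows downward as in SVG."""
        if not self.samples:
            return []
        x = linear_scale(extent(t for t, _ in self.samples), (0, self.width))
        y = linear_scale(extent(v for _, v in self.samples), (self.height, 0))
        return [(x(t), y(v)) for t, v in self.samples]
```

Because the domains are recomputed from whatever is currently in the window, the oldest and newest samples always sit at the left and right edges, and the coldest and warmest readings at the bottom and top.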
