ROS needs to know everything about the physics of a robot. It starts with dimension to avoid collusions – both with the outside world and the robot itself (e.g. if it is using two robot arms at once). Further it is relevant where the sensors are – or in my case where the [amazon &title=Asus Xtion&text=Asus Xtion] is located in relation to the robots base. Another interesting information are the robots joints. Its needed to drive the wheels and to rotate the camera. For all that, a detailed description and representation of the robot in a format that a computer understands is essential.

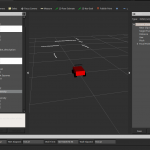

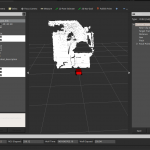

Today I’ve made a hugh step in the simulation field, so struggeling with the motors in the real world for the last few days doesn’t feel too bad – at least I can generate some nice pictures now:

- The new Xtion as part of the robot model. It has all camera links attached.

- Because now the robot know its parts, it is possible to show data and itself together – here with a laserscan generated from the xtion

- the complete point cloud

- a list of all avaiable ros topics so far

- the cubietruck is at 100% capacity while scanning with 1fps 😀

For me an interesting journey started, with a lot ups and downs – currently I am really excited where we will be in 18 weeks – because 2 weeks of my thesis already passed.