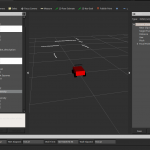

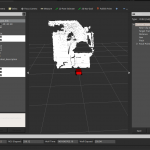

Most of my work depended on an efficient connection between the Asus Xtion and the CubieTruck, with the Xtion serving as a low-cost laser scanner. The Asus Xtion usually delivers 3D point cloud data (sensor_msgs/PointCloud2), while most SLAM algorithms need 2D sensor_msgs/LaserScan messages to work properly. The solution to this mismatch is the depthimage_to_laserscan package.

If you already have ros-indigo-openni2-camera and ros-indigo-openni2-launch up and running, you can use the following launch file:

    <!-- this code originates from https://github.com/turtlebot/turtlebot/blob/hydro/turtlebot_bringup/launch/3dsensor.launch -->
    <launch>
      <!-- "camera" should uniquely identify the device. All topics are pushed down
           into the "camera" namespace, and it is prepended to tf frame ids. -->
      <arg name="camera" default="camera"/>
      <arg name="publish_tf" default="true"/>

      <!-- Factory-calibrated depth registration -->
      <arg name="depth_registration" default="true"/>
      <arg if="$(arg depth_registration)" name="depth" value="depth_registered" />
      <arg unless="$(arg depth_registration)" name="depth" value="depth" />

      <!-- Processing Modules -->
      <arg name="rgb_processing" default="true"/>
      <arg name="ir_processing" default="true"/>
      <arg name="depth_processing" default="true"/>
      <arg name="depth_registered_processing" default="true"/>
      <arg name="disparity_processing" default="true"/>
      <arg name="disparity_registered_processing" default="true"/>
      <arg name="scan_processing" default="true"/>

      <!-- Worker threads for the nodelet manager -->
      <arg name="num_worker_threads" default="4" />

      <!-- Laserscan topic -->
      <arg name="scan_topic" default="scan"/>

      <include file="$(find openni2_launch)/launch/openni2.launch">
        <arg name="camera" value="$(arg camera)"/>
        <arg name="publish_tf" value="$(arg publish_tf)"/>
        <arg name="depth_registration" value="$(arg depth_registration)"/>
        <arg name="num_worker_threads" value="$(arg num_worker_threads)" />

        <!-- Processing Modules -->
        <arg name="rgb_processing" value="$(arg rgb_processing)"/>
        <arg name="ir_processing" value="$(arg ir_processing)"/>
        <arg name="depth_processing" value="$(arg depth_processing)"/>
        <arg name="depth_registered_processing" value="$(arg depth_registered_processing)"/>
        <arg name="disparity_processing" value="$(arg disparity_processing)"/>
        <arg name="disparity_registered_processing" value="$(arg disparity_registered_processing)"/>
      </include>

      <!-- Laserscan
           This uses lazy subscribing, so will not activate until scan is requested.
      -->
      <group if="$(arg scan_processing)">
        <node pkg="nodelet" type="nodelet" name="depthimage_to_laserscan" args="load depthimage_to_laserscan/DepthImageToLaserScanNodelet $(arg camera)/$(arg camera)_nodelet_manager">
          <!-- Pixel rows to use to generate the laserscan. For each column, the scan will
               return the minimum value for those pixels centered vertically in the image. -->
          <param name="scan_height" value="10"/>
          <param name="output_frame_id" value="/$(arg camera)_depth_frame"/>
          <param name="range_min" value="0.45"/>
          <remap from="image" to="$(arg camera)/$(arg depth)/image_raw"/>
          <remap from="scan" to="$(arg scan_topic)"/>
          <remap from="$(arg camera)/image" to="$(arg camera)/$(arg depth)/image_raw"/>
          <remap from="$(arg camera)/scan" to="$(arg scan_topic)"/>
        </node>
      </group>
    </launch>
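To make the scan_height and range_min parameters more tangible, here is a minimal Python sketch of the idea described in the launch file comment: for each image column, take the closest valid reading within a band of rows centered vertically in the image. It is not the package's actual implementation. The function name, the pinhole intrinsics `fx` and `cx`, the `range_max` default and the use of infinity for "no reading" are all assumptions made for illustration.

```python
import math


def depth_rows_to_scan(depth, fx, cx, scan_height=10, range_min=0.45, range_max=10.0):
    """Collapse the central rows of a depth image into one range per column.

    depth: list of rows, each a list of depths in metres (0 or NaN = no reading).
    fx, cx: hypothetical pinhole intrinsics in pixels (focal length, principal point).
    Returns one range per column; math.inf marks columns without a valid reading.
    """
    height = len(depth)
    width = len(depth[0])
    # Select scan_height rows centered vertically in the image.
    top = max((height - scan_height) // 2, 0)
    band = depth[top:top + scan_height]

    ranges = []
    for u in range(width):
        best = math.inf
        for row in band:
            z = row[u]
            if math.isnan(z) or z <= 0.0:
                continue  # invalid pixel
            # Horizontal offset of the pixel ray, then planar distance to the point.
            x = (u - cx) * z / fx
            r = math.hypot(x, z)
            if range_min <= r <= range_max and r < best:
                best = r
        ranges.append(best)
    return ranges
```

With scan_height set to 10 as in the launch file, only ten rows of every depth image are touched, which is one reason this conversion is cheap compared to working on the full point cloud.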

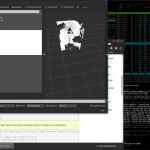

As you can see, depthimage_to_laserscan is not started as a standalone node. It is loaded as a nodelet into the camera's nodelet manager, so it runs in the same process as the camera driver.

"Nodelets are designed to provide a way to run multiple algorithms on a single machine, in a single process, without incurring copy costs when passing messages intraprocess." (quoted from the ROS wiki)

Because the depth images are handed over within one process instead of being copied between nodes, nodelets hugely reduce the cost of handling our 3D data and allow significantly higher publishing rates.

An important property of a robot is the rate at which it produces data. A low rate affects most higher-level algorithms and leads to incorrect results. Depth sensors in particular have to publish enough data to create detailed maps.

The Asus Xtion Pro driver OpenNI2 and the ROS package openni2_camera offer multiple run modes, which can be set via dynamic_reconfigure (I suggest using it in combination with rqt). Another essential option influencing performance is the data_skip parameter. It lets the system skip a certain number of the frames the hardware produces before loading them into memory, and thereby remarkably reduces computational load. It can be set to an integer value between zero, which means no frames are skipped, and ten, which means only every eleventh frame is processed.
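The effect of data_skip on the frame rate can be written down in a few lines. The following is a small sketch, assuming that a value of N means N frames are dropped for every frame that is processed, as described above. The function name and the 30 Hz sensor rate in the usage lines are illustrative assumptions, not values taken from the driver.

```python
def effective_rate(sensor_fps, data_skip):
    """Frames per second that remain after skipping `data_skip` frames per processed frame."""
    if not 0 <= data_skip <= 10:
        raise ValueError("data_skip must be an integer between 0 and 10")
    # One frame out of every (data_skip + 1) is kept.
    return sensor_fps / (data_skip + 1)


# Illustrative usage with an assumed 30 Hz sensor mode:
for skip in (0, 2, 10):
    print(skip, round(effective_rate(30, skip), 2))
```

A data_skip of ten therefore leaves one eleventh of the sensor's nominal rate for the rest of the system.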

Performance Check

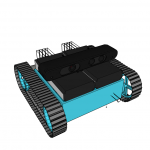

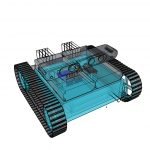

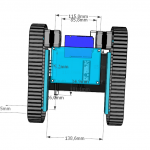

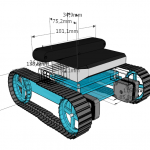

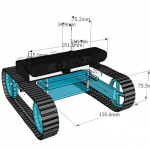

The different combinations of resolutions, maximum frequencies and the data_skip -parameter

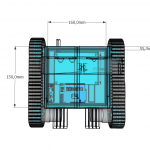

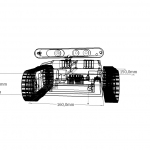

ran on the aMoSeRo (my low cost [amazon &title=CubieTruck&text=CubieTruck] robot) is illustrated in the table below. As it can be seen, especially the amount of frames that has to be processed per second highly influences the complete system.
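To get a feeling for why the number of frames per second matters so much on a small board, a rough estimate of the raw depth data volume helps. The sketch below assumes 16 bits per depth pixel for the raw depth image. The resolutions and rates in the usage lines are illustrative examples and are not the measurements from the table.

```python
BYTES_PER_DEPTH_PIXEL = 2  # assumption: raw depth image with 16 bits per pixel


def depth_bytes_per_second(width, height, fps, data_skip=0):
    """Estimate the raw depth data volume per second that has to be processed."""
    frames_per_second = fps / (data_skip + 1)
    return width * height * BYTES_PER_DEPTH_PIXEL * frames_per_second


# Illustrative modes (not the measured values from the table):
for width, height, fps, skip in [(640, 480, 30, 0), (320, 240, 30, 0), (640, 480, 30, 2)]:
    mb = depth_bytes_per_second(width, height, fps, skip) / 1e6
    print(f"{width}x{height} @ {fps} Hz, data_skip={skip}: {mb:.1f} MB/s")
```

Halving the resolution in both dimensions cuts the volume to a quarter, while data_skip scales it down linearly, so both settings are worth tuning together.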

In conclusion, the depthimage_to_laserscan package is really useful when working with low-cost depth sensors like the Asus Xtion or the Microsoft Kinect. It is furthermore essential when interfacing with SLAM algorithms.