UPDATE: see my step by step guide for depthimage_to_laserscan here.

PointClouds need a lot of processing and network traffic load. For 2D navigation LaserScans are a good option to decrease this loads, in my case below to 30% of the original.

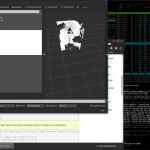

So after starting my customized openni2_launch (the launchers of openni_camera, and both ros drivers) with a custom Openni2 driver dir:

OPENNI2_DRIVERS_PATH=/usr/lib/OpenNI2/Drivers/ roslaunch /home/user/catkin_ws/src/robot_bringup/launch/openni2.launch

(OPENNI2_DRIVERS_PATH fixes all issues with the camera driver nodelet)

its possible to process this down to a /scan topic by:

rosrun depthimage_to_laserscan depthimage_to_laserscan image:=/camera/depth/image_raw

or directly in a launch file using the handy nodelet manager:

<group>

<node pkg="nodelet" type="nodelet" name="depthimage_to_laserscan" args="load depthimage_to_laserscan/DepthImageToLaserScanNodelet $(arg camera)/$(arg camera)_nodelet_manager">

<param name="scan_height" value="10"/>

<param name="output_frame_id" value="/$(arg camera)_depth_frame"/>

<param name="range_min" value="0.45"/>

<remap from="image" to="$(arg camera)/$(arg depth)/image_raw"/>

<remap from="scan" to="$(arg scan_topic)"/>

<remap from="$(arg camera)/image" to="$(arg camera)/$(arg depth)/image_raw"/>

<remap from="$(arg camera)/scan" to="$(arg scan_topic)"/>

</node>

</group>

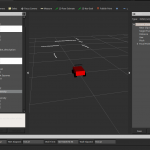

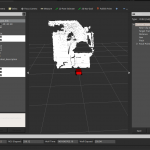

After that it is possible to visualize the new topic using rviz getting something like that:

![Screenshot[amazon &title=CubieTruck&text=CubieTruck]](http://defendtheplanet.net/wp-content/uploads/2014/05/Screenshot[amazon &title=CubieTruck&text=CubieTruck]-1024x640.png)